Stable Diffusion Creators Unleash Video Generator That Dreams Up Any Style

By Mikelle Leow, 08 Feb 2023

Every day, it seems like a new game-changing AI tool steps into the light. The latest comes by way of Runway, an AI video company that helped build the popular text-to-image model Stable Diffusion with University of Munich researchers and Stability AI. Runway has been developing video editors for about five years now, but its newest project comes closest to the AI art generators that are making waves today.

Gen-1, the new AI model, is trained to convincingly apply the styles or compositions of image or text prompts to videos.

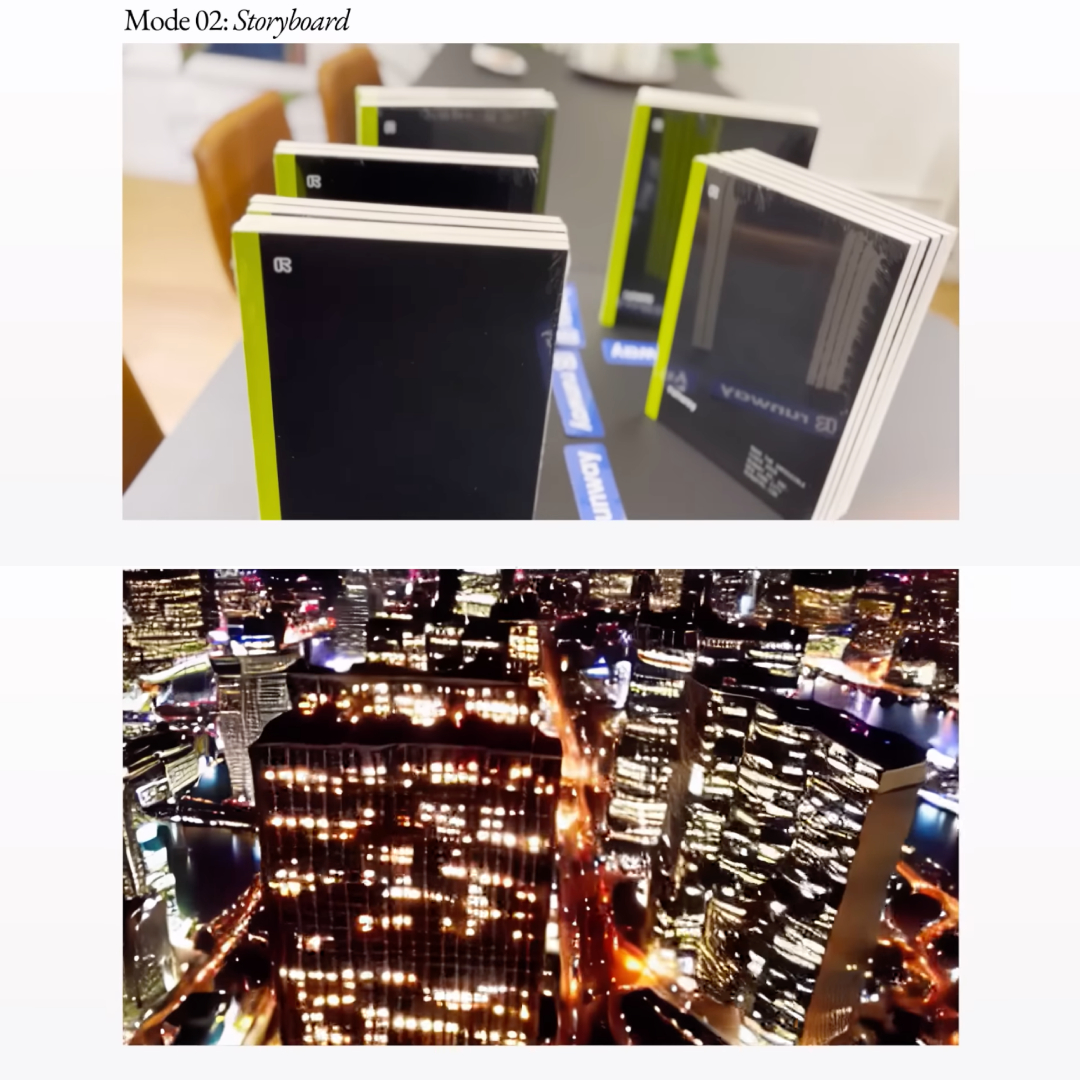

On its website, Runway explains that Gen-1 can do this in five distinct modes. There’s ‘Stylization’, in which a preferred style, like claymation, changes the aesthetic of a video; ‘Storyboard’, where you can generate stylized renders out of regular objects; ‘Mask’, where areas of footage are replaced; ‘Render’, whereby untextured renders are finished by AI; and ‘Customization’, to further edit videos.

Gen-1’s announcement comes just as Google unveils Dreamix, a similar video generator that conjures up footage from simple text, image, or video inputs. Dreamix is closed to the public at the moment.

Runway’s approach is more open. Runway CEO and co-founder Cristóbal Valenzuela asserts that Gen-1 is among the first in the market to be developed closely with filmmakers and VFX editors.

In a promotional video, the company envisions that the technology will bring the world “closer to realizing the future of storytelling.”

That future is so close, Valenzuela believes, that AI technology will be capable of generating full-length feature films very soon.

Gen-1 is currently available by invitation only. However, you can join its waitlist to gain access to the tool when it launches in the coming weeks.

[via MIT Technology Review and HungryMinded / Medium, video and screenshots via Runway]