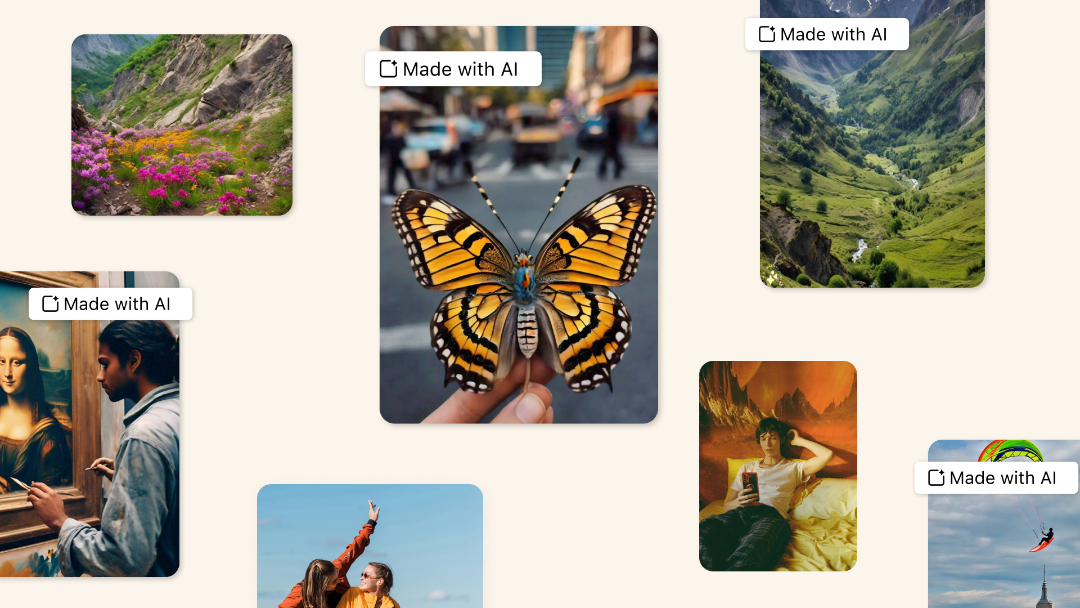

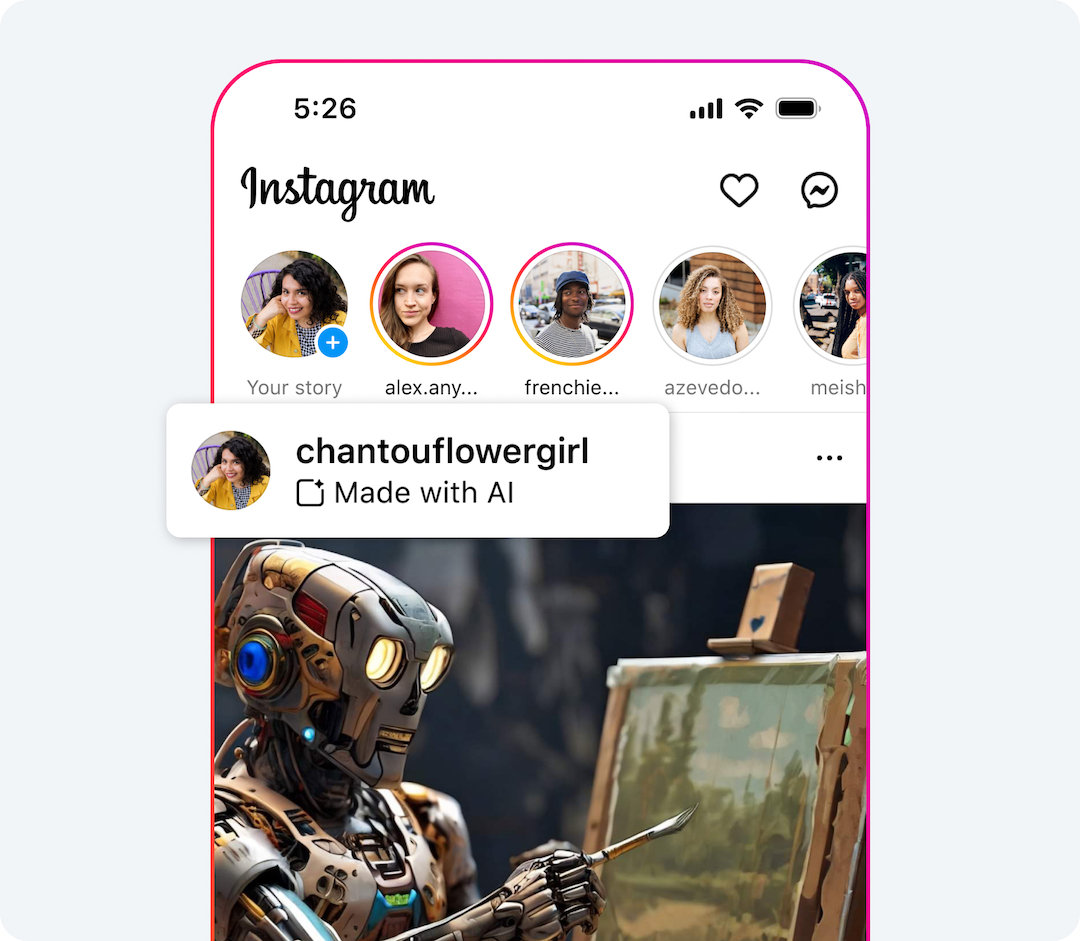

Meta Rolling Out ‘Made With AI’ Labels On Instagram & Facebook

By Mikelle Leow, 10 Apr 2024

Image via Facebook

Meta, the social media giant encompassing Facebook, Instagram, and Threads, is rolling out a new labelling system to flag content created or altered with artificial intelligence. Starting in May 2024, users will see ‘Made with AI’ badges on photos, videos, and audio clips that were generated or modified using AI. This move comes amid growing concerns about the spread of deepfakes: highly realistic AI-generated videos that can be used to make people appear to say or do things they never did.

The introduction of labels follows pressure from Meta’s independent Oversight Board, which urged the company to increase transparency around manipulated media. Previously, Meta’s approach focused on removing deepfakes deemed particularly harmful, but critics argued this wasn’t enough. The ‘Made with AI’ labels offer a middle ground, allowing users to make informed decisions about the content they see.

Under the new policy, content will generally remain on the platform as long as it doesn’t violate other community guidelines, such as those prohibiting hate speech or voter interference.

Meta’s vice president of content policy, Monika Bickert, says the company agrees with the argument “that our existing approach is too narrow.” Meta’s manipulated media policy, written in 2020, covered only videos “created or altered by AI to make a person appear to say something they didn’t say.”

“In the last four years, and particularly in the last year, people have developed other kinds of realistic AI-generated content like audio and photos, and this technology is quickly evolving,” Bickert continues. “As the Board noted, it’s equally important to address manipulation that shows a person doing something they didn’t do.”

However, some experts caution that labels alone may not be a silver bullet. AI detection methods are still evolving, and creators of deepfakes could potentially find ways to bypass them. Additionally, the sheer volume of content uploaded to Meta’s platforms daily presents a challenge for effective monitoring.

The tech giant says it will begin phasing in the labels in May and will stop removing content solely on the basis of its older manipulated video policy in July. The gap will give users time to get used to the self-disclosure process, notes Bickert.

The landscape of content consumption is changing fast. Equipping users with the critical thinking skills to identify potential deepfakes and assess the credibility of online content remains crucial. Tools like Meta’s AI labels may help to cultivate an eye for inauthentic qualities in photos and videos.

[via Social Media Today, Tech Xplore, PYMNTS, images via Facebook]